Scraping Douban Top 250 Movie Information with Python Scrapy

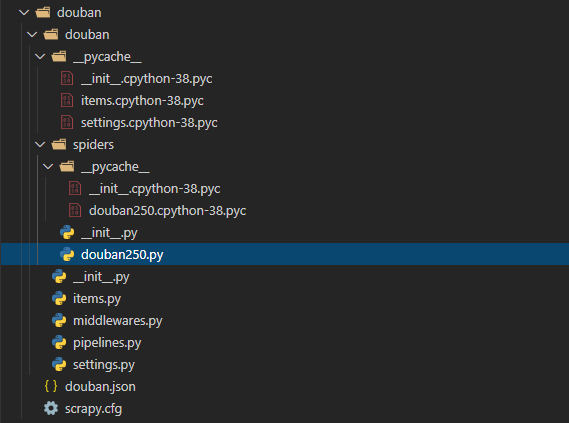

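Create the Scrapy project

    scrapy startproject douban

Create the spider file

Scrapy does not allow a spider to share the project's name (douban), so the spider is called douban250 here, matching the code further down. genspider takes the spider name followed by the domain to crawl.

    cd douban
    scrapy genspider douban250 movie.douban.com

Directory structure

Start writing the spider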

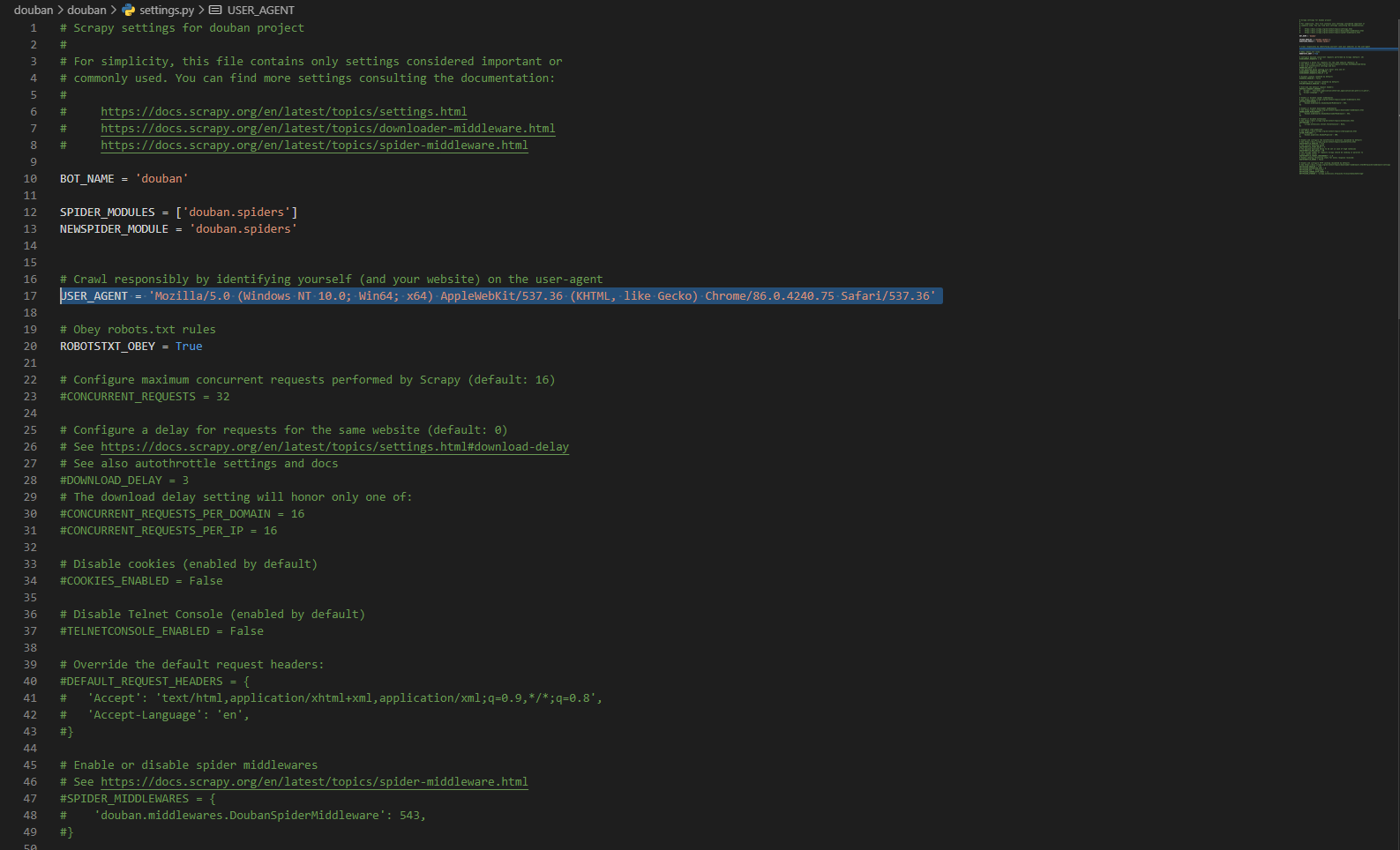

Set USER_AGENT

Without a USER_AGENT setting, no data can be scraped: Douban rejects requests sent with Scrapy's default user agent.

Edit settings.py

    # ... rest of the file omitted for brevity ...

    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.75 Safari/537.36'

    # ... rest of the file omitted for brevity ...
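
Two other settings.py options may be worth considering for this crawl. The fragment below is only a sketch and is not part of the original configuration: the one-second delay is an arbitrary value chosen for illustration, and FEED_EXPORT_ENCODING is suggested because the item fields and values are Chinese, which Scrapy's JSON export writes as \uXXXX escapes unless an encoding is set.

```python
# settings.py (excerpt) - illustrative values, not from the original project

# Wait between requests to the same site (seconds); 1 is an assumed value.
DOWNLOAD_DELAY = 1

# Write exported feeds (e.g. douban.json) as readable UTF-8
# instead of \uXXXX-escaped ASCII.
FEED_EXPORT_ENCODING = "utf-8"
```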

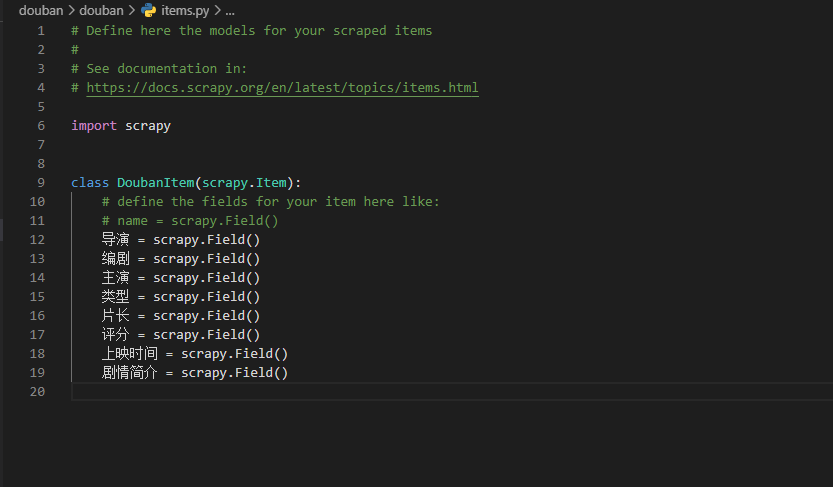

Edit items.py

    import scrapy


    class DoubanItem(scrapy.Item):
        # Fields scraped from each movie's detail page
        导演 = scrapy.Field()      # director
        编剧 = scrapy.Field()      # screenwriter(s)
        主演 = scrapy.Field()      # lead actors
        类型 = scrapy.Field()      # genre(s)
        片长 = scrapy.Field()      # runtime
        评分 = scrapy.Field()      # rating
        上映时间 = scrapy.Field()  # release date(s)
        剧情简介 = scrapy.Field()  # plot summary

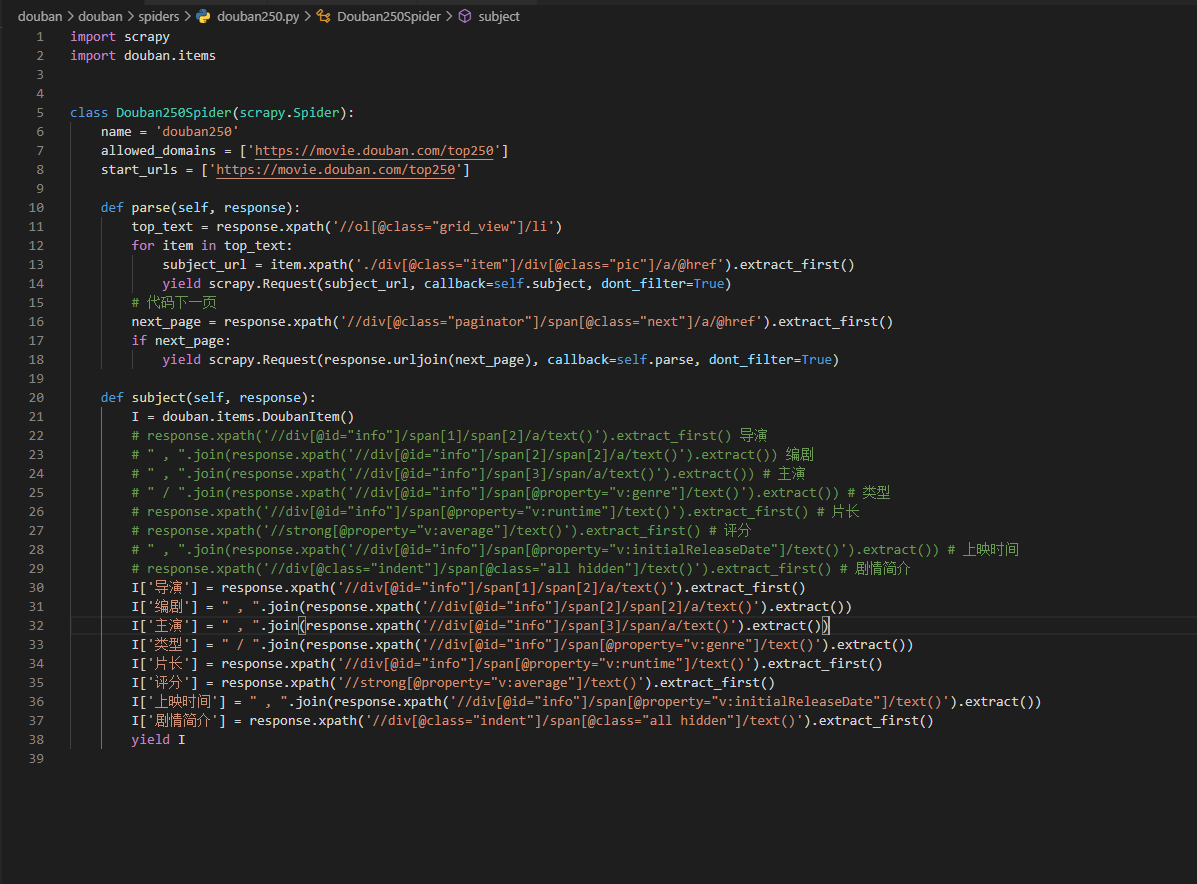

Write the spider code

    import scrapy

    from douban.items import DoubanItem


    class Douban250Spider(scrapy.Spider):
        name = 'douban250'
        # allowed_domains takes bare domain names, not full URLs
        allowed_domains = ['movie.douban.com']
        start_urls = ['https://movie.douban.com/top250']

        def parse(self, response):
            # Each <li> in the list is one movie; follow the link to its detail page
            for movie in response.xpath('//ol[@class="grid_view"]/li'):
                subject_url = movie.xpath('./div[@class="item"]/div[@class="pic"]/a/@href').get()
                if subject_url:
                    yield scrapy.Request(subject_url, callback=self.subject)

            # Follow the "next page" link until there is none left
            next_page = response.xpath('//div[@class="paginator"]/span[@class="next"]/a/@href').get()
            if next_page:
                yield scrapy.Request(response.urljoin(next_page), callback=self.parse)

        def subject(self, response):
            # Parse one movie detail page into a DoubanItem
            item = DoubanItem()
            # Director
            item['导演'] = response.xpath('//div[@id="info"]/span[1]/span[2]/a/text()').get()
            # Screenwriters
            item['编剧'] = " , ".join(response.xpath('//div[@id="info"]/span[2]/span[2]/a/text()').getall())
            # Lead actors
            item['主演'] = " , ".join(response.xpath('//div[@id="info"]/span[3]/span/a/text()').getall())
            # Genres
            item['类型'] = " / ".join(response.xpath('//div[@id="info"]/span[@property="v:genre"]/text()').getall())
            # Runtime
            item['片长'] = response.xpath('//div[@id="info"]/span[@property="v:runtime"]/text()').get()
            # Rating
            item['评分'] = response.xpath('//strong[@property="v:average"]/text()').get()
            # Release dates
            item['上映时间'] = " , ".join(response.xpath('//div[@id="info"]/span[@property="v:initialReleaseDate"]/text()').getall())
            # Plot summary
            item['剧情简介'] = response.xpath('//div[@class="indent"]/span[@class="all hidden"]/text()').get()
            yield item
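
The spider stores every field as the raw string returned by the XPath query, so values may be None or carry surrounding whitespace. The helpers below are a minimal sketch of how such values could be tidied before they are stored. The function names are hypothetical, and the runtime format (a number followed by 分钟, "minutes") is an assumption about what the page returns, not something the code above guarantees.

```python
import re
from typing import Optional


def clean_text(value: Optional[str]) -> Optional[str]:
    """Collapse runs of whitespace into single spaces; pass None through."""
    if value is None:
        return None
    return " ".join(value.split())


def parse_runtime_minutes(value: Optional[str]) -> Optional[int]:
    """Return the first integer found in a runtime string, or None."""
    if not value:
        return None
    match = re.search(r"\d+", value)
    return int(match.group()) if match else None


def parse_rating(value: Optional[str]) -> Optional[float]:
    """Convert a rating string to float, or None if it is missing or malformed."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None
```

Inside the detail-page callback these could wrap the extracted values, for example `parse_rating(response.xpath('//strong[@property="v:average"]/text()').get())`.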

Run the spider

    # Run the spider
    scrapy crawl douban250

    # Run the spider and save the scraped data to douban.json
    scrapy crawl douban250 -o douban.json
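
Once douban.json exists, it can be read back with the standard library. The sketch below assumes the file is the JSON array that Scrapy's JSON export produces, with one object per movie keyed by the field names from items.py. The helper names `load_items` and `top_rated` are hypothetical.

```python
import json


def load_items(path):
    """Load the list of scraped movies from a Scrapy JSON export."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def top_rated(items, n=10):
    """Return the n movies with the highest 评分 (rating), best first."""
    def rating(item):
        # Ratings are stored as strings; missing or malformed ones sort last.
        try:
            return float(item.get("评分"))
        except (TypeError, ValueError):
            return float("-inf")

    return sorted(items, key=rating, reverse=True)[:n]
```

For example, `top_rated(load_items("douban.json"), n=5)` would return the five highest-rated entries from the export.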
